|

AI/ML has the potential to deliver incredible value to customers. A single breakthrough can make a product company's growth soar. A fine-tuned model can completely transform an enterprise's operations. On the face of it, the mechanics of delivering such a program are very similar to those of traditional projects, so it is tempting to think of implementation as the easy part. But because of the non-deterministic nature of AI/ML, all the pitfalls of ordinary software development programs - misaligned objectives, underestimation, lack of process, skimping on QA, ignoring risks, etc. - are amplified tenfold. To deliver successfully, you need to ratchet up the program diligence.

Don't Oversell the Vision Before It's Proven

In many industries, sales is used to ‘selling’ the vision of the product before it is built, and customers assume vapourware by default. No one bats an eye because we’re accustomed to the idea that engineering will always be able to fulfill whatever we’re selling, given enough time and money. AI/ML, however, is a world of possibilities, many of which may never be implementable in practice, even with a huge budget. It's easy to promise "The product will automatically predict X with high accuracy," where X could be anything from detecting a security breach to predicting stock prices to finding the perfect outfit for you to wear. But even if the prototype is already 70% accurate, it may never get to 80%, or whatever level it needs to reach to be commercially viable.

Don't oversell the vision. Be honest with customers, and be honest within the company walls too. Imagine slightly exaggerating results to your manager, who then exaggerates a little further to their manager, and so on; pretty soon the board is hearing that the product is 110% accurate and can slice bread too!

Be Absolutely Clear: Is This a Prototype or a Commercial Product?
When starting a traditional project with new technology, it’s common to begin with a prototype. Although best practice is to "throw away" that prototype and start fresh on the commercial development, management often pushes teams to keep working directly on the prototype and turn it into a commercial product, skipping the throwaway step. The result is a shaky development foundation that can cause code debt and bugs for the lifetime of the product. Far from ideal, but it can be liveable in a business sense.

With AI, skipping the throwaway step is no longer possible. When a data scientist is prototyping a model, their focus is to validate whether the product has any chance of being delivered, and if not, what other related things it could do instead to deliver value. Prototyping AI involves many shortcuts - working on a "clean" data set (artificially clean, biased, small sample), where the availability and timing of the data may not be an issue. The work is done in a Jupyter notebook or other simulated environment that is vastly different from a real-world environment, using models and techniques that could never be used in a commercial context. The business problem itself may be fluid, as the data scientist may realize that the algorithm can't solve X but can solve Y instead.

When the prototype has met its objectives, a new project should be started from scratch for the commercial development. In commercial development, the team's work is focused on entirely different things such as:

So, is the project a prototype or a commercial endeavour? Alignment from the team to upper management is critical. The answer can't be both.

R&D Must be Informed by Business Requirements

From research and prototyping to commercial development, AI/ML requires making decisions and trade-offs. This is where the PM needs to provide loads of context to the R&D team: what are the customer problem(s) that we are trying to solve? What parameters and trade-offs would be acceptable to a customer? Have we considered the cost trade-offs of operating in practice? For example:

From a data scientist's point of view, whether a model is sufficient to support the business case might be a higher bar than whether the model is a good model. Clear business strategy and customer context upfront can help guide the direction of the development (kill the research and start fresh, re-focus it, etc.).

Test environment vs. Real-world environment

Skimping on QA strategy, test environments and real-world testing is a classic software development pitfall. With AI/ML programs, this problem is again amplified. The biggest challenges to commercial success lie in the substantial delta between the test environment and test data on one side and the highly variable nature of the field on the other. Pitfalls include:

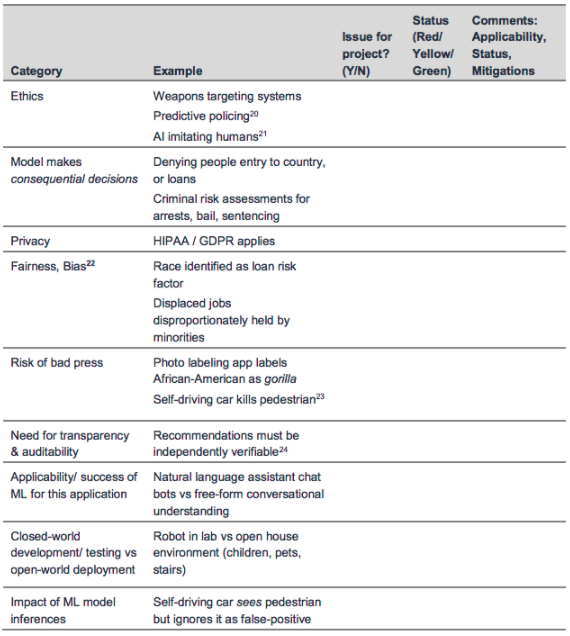

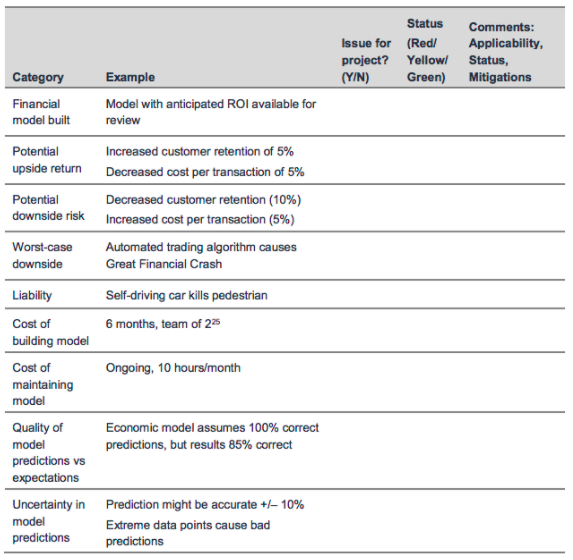

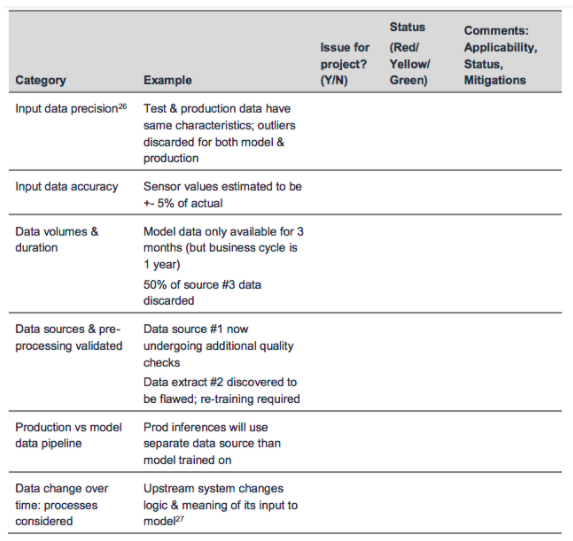
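The delta between test data and field data can be made measurable. The following is a minimal sketch, not a method taken from the article: it assumes a single numeric feature and uses the population stability index (PSI) as one possible drift score. The function name, the bin count, and the 0.25 alert threshold (a commonly quoted rule of thumb) are all assumptions chosen for illustration.

```python
import math
from typing import Sequence


def population_stability_index(
    reference: Sequence[float],
    field: Sequence[float],
    bins: int = 10,
) -> float:
    """Compare two samples of one feature; larger values mean more drift."""
    if not reference or not field:
        raise ValueError("both samples must be non-empty")
    lo, hi = min(reference), max(reference)
    if hi == lo:
        raise ValueError("reference sample has no spread to bin")
    width = (hi - lo) / bins

    def bin_shares(values: Sequence[float]) -> list[float]:
        counts = [0] * bins
        for v in values:
            # Clamp so values outside the reference range land in the edge bins.
            idx = int((v - lo) / width)
            counts[max(0, min(bins - 1, idx))] += 1
        # A small floor avoids log(0) when a bin is empty.
        return [max(c / len(values), 1e-6) for c in counts]

    ref_shares = bin_shares(reference)
    field_shares = bin_shares(field)
    return sum(
        (f - r) * math.log(f / r) for r, f in zip(ref_shares, field_shares)
    )


# Hypothetical data: the test set and a field sample shifted upwards by 0.5.
test_sample = [i / 100 for i in range(100)]
field_sample = [0.5 + i / 100 for i in range(100)]
DRIFT_ALERT = 0.25  # assumed rule-of-thumb threshold, tune per feature

score = population_stability_index(test_sample, field_sample)
print("drift alert" if score > DRIFT_ALERT else "no drift alert")
```

A score like this only covers one feature of one data source; availability, timing and error patterns need their own checks.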

Careful modelling of the different data sources and their attributes - features, availability, timing, anticipated errors and drift - can lay the foundation for the right QA strategy: what to test, where to test, and how to judge success.

Risk Management

Unless they work at a big bank or large enterprise, PMs don't spend nearly as much time on risk management as PMI would recommend. In fact, if you spend too much time talking about risks in a small company, you get pegged as a dissenter who doesn't believe in the company's success ("Why are you talking about risks? You don't believe we can make it happen?").

In Amazon's excellent paper Managing Machine Learning Projects, the author lives and dies by scorecards for all facets of the program - go-to-market, financial, data, etc. PMPs will recognize these scorecards as simply actionable risk registers.

Here's an example of a scorecard for go-to-market, which the program manager would continuously track and use to direct progress and align the organization:

Example scorecard for financials:

Example scorecard for the data aspects of the program:

Starting from canned scorecards like these is recommended, since the list of risks is pre-populated based on others' lessons learned. Then expand on them with your own project-specific risks.

Give the team the big picture

In AI/ML programs, once production starts, research usually continues in parallel. Managing both as one cohesive team is a new challenge for traditional program managers.

From a process perspective, sometimes the requirements are communicated from research to production by simply handing over the researcher’s Jupyter notebook, or a set of Python or R scripts. If the prod team redevelops and optimizes the code for production while the research team continues from their base notebook, you have the problem of versioning the code and identifying changes.
From a human perspective, because the individual team members are often highly educated and experienced, especially data scientists who may have a PhD, it can be easy to assume that they already see the big picture. Nevertheless, these are still just people, and people see the world through their own lens until the manager gives them the big picture. To ensure a well-oiled research and production machine:

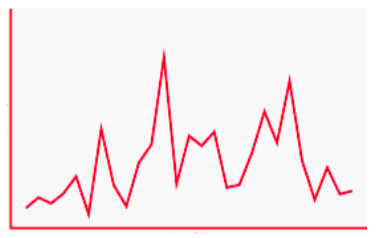

Don't Assume Linear Progress

Finally, and perhaps most obviously, because of the non-deterministic nature of AI/ML development, results usually do not arrive as a set of linear steps that can be measured. The model may jump from 50% to 70% accuracy quickly, but getting to 80% may take much longer, or not be possible at all. Or to get there, the team may have to scrap some of the work and go back down to 50% before it can go back up. Similarly, with one data source the algorithm might be highly performant and accurate, and with another its performance suddenly drops.

Metrics-focused management teams who are used to linear progressions can find these spiky results jarring. Metrics need to be part of the equation, especially before making any commercial release decisions, but they need to be accompanied by qualitative insight, lots of communication, and the flexibility to pivot from the original plan if things are not progressing as expected after a certain time. Amazon again provides tools to help management visualize progress and plan contingency, but ultimately, for any organization that hasn't undertaken AI/ML before, there's no panacea. This is culture change, plain and simple.
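The idea of pivoting "if things are not progressing as expected after a certain time" can be turned into an explicit, agreed rule. The sketch below is one hypothetical way to do so and is not one of the Amazon tools mentioned above: `review_progress`, `patience`, and the sample numbers are assumptions for illustration. It tracks the best result so far rather than the latest one, so a temporary dip does not read as failure, and it flags a contingency review only when the best result has not improved for `patience` evaluations and the target is still unmet.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class ProgressReview:
    best: float
    evaluations_since_best: int
    target_met: bool
    needs_contingency_review: bool


def review_progress(
    history: Sequence[float], target: float, patience: int = 3
) -> ProgressReview:
    """Summarize a spiky metric history against a target.

    history: metric per evaluation, oldest first (for example accuracy).
    patience: evaluations without a new best before a review is flagged.
    """
    if not history:
        raise ValueError("history must contain at least one evaluation")
    best = history[0]
    since_best = 0
    for value in history[1:]:
        if value > best:
            best = value
            since_best = 0
        else:
            since_best += 1
    target_met = best >= target
    return ProgressReview(
        best=best,
        evaluations_since_best=since_best,
        target_met=target_met,
        needs_contingency_review=(not target_met) and since_best >= patience,
    )


# Hypothetical history: a quick jump, a plateau, a rework dip, a partial recovery.
accuracy = [0.50, 0.70, 0.71, 0.64, 0.52, 0.69, 0.70]
print(review_progress(accuracy, target=0.80, patience=3))
```

A flag from a rule like this is a prompt for the qualitative conversation, not a substitute for it.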

AI Programs - New Diligence Required

Back in the 1970s and 80s, software development was a sort of "black magic", where both benefits and risks could take you by surprise, forcing management to plan carefully. We have since made leaps and bounds in the maturity and predictability of our software engineering practices, to the point that we take for granted that anything can be engineered given enough time and budget. In a sense, AI takes us back to those early days where anything was possible, but nothing was to be taken for granted. The risks are high, but in a way, that's a good thing - it should increase the maturity of our management processes and responsibility.

|