
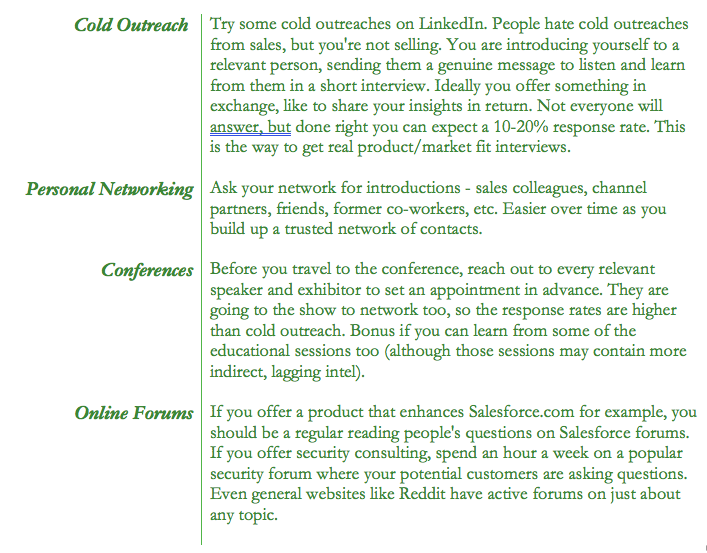

Product leaders are inundated with data. SaaS product and website analytics can slice and dice every aspect of the customer journey. I can see that 22% of my customers between the ages of 30 and 40 spent over 3.2 seconds looking at the new graphic on my website. I know that 16% of freemium users converted to paid in the month since we added 3 new features. Quantitative measures like this can point to areas of interest that require investigation and experimentation, but they won't tell you why those areas matter. Qualitative data, i.e., talking to people, gives you the why. It tells you what was motivating the user when they spent 3.2 seconds looking at your graphic and what problem they were trying to solve. You need both: quantitative and qualitative.

## Multiple Researchers Examining The Same Data Find Very Different Results

In a recent news story, twenty-nine research teams in the scientific community were given the same dataset and asked to analyze it. The question was relatively straightforward: are soccer referees more likely to give red cards to players with darker skin than to those with lighter skin? The answer, on the other hand, was not. Twenty teams (69%) found a significant effect (referees were more likely to give red cards to darker-skinned players), while nine teams (31%) found no significant effect (referees did not appear to discriminate according to skin tone). Even among the teams that found an effect, its size ranged from very slight to very large.

So how did these researchers, looking at the exact same data, arrive at such different results? Each team made different decisions during the analysis, including which other factors to control for, what type of analysis to run, and how to deal with randomness and outliers in the dataset, and those decisions led to divergent conclusions. In other words, they each interpreted the data and the context around it differently.
If even professional researchers can derive wildly varying conclusions from the same data, product managers and growth marketers, who are at best armchair researchers, are surely prone to the same traps.

## But... We’ve Gathered Tons of Data...

If you gather enough data, meaningless patterns will emerge. A simple example: if you ask enough people what month they were born in, along with a hundred other research questions, you will “discover” things like people born in November drinking a disproportionate amount of Budweiser compared to everyone else, or 86% of people with July birthdays preferring foreign cars over North American ones. You haven’t actually discovered anything except a statistical phenomenon known as clustering: random data naturally contains clusters, and if you test enough combinations, some of them will look significant purely by chance. Roll a pair of dice 100,000 times and you’ll get strings of snake eyes, double sixes, and other unlikely-looking sequences. That doesn’t reveal a meaningful pattern; in a sequence that long, some streaks are expected. To find out whether a trend has meaning, you would have to run a separate follow-up experiment.

Automatically assuming that a cluster of data has meaning, and making design decisions based on it, can lead you astray. For example, a client once asked us why their website was so popular on Tuesdays, as shown by their analytics data. It didn’t make sense, so we asked them to redo the test. In the second test, the pattern disappeared.

The same is true for old-fashioned survey data. You can see patterns emerge that may be meaningless, or that point to potential areas of interest requiring further investigation, but they don’t give you any real conclusions.
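To see how easily meaningless patterns appear, here is a minimal Python sketch (standard library only) that simulates the birth-month survey with no real relationships in it: every respondent's birth month and every yes/no answer is generated at random. It then compares each month's yes-rate against everyone else's with a two-proportion z-test. The respondent count (2,400), question count (100), seed, the 1.96 cutoff (roughly a 5% two-sided significance level), and the helper names `z_two_proportions` and `count_false_discoveries` are all illustrative assumptions, not part of any tool mentioned above.

```python
import math
import random


def z_two_proportions(yes_a: int, n_a: int, yes_b: int, n_b: int) -> float:
    """Two-proportion z statistic using the pooled standard error."""
    pooled = (yes_a + yes_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return 0.0 if se == 0 else (yes_a / n_a - yes_b / n_b) / se


def count_false_discoveries(n_people: int = 2400, n_questions: int = 100,
                            seed: int = 42) -> tuple[int, int]:
    """Return (comparisons flagged as 'significant', comparisons run).

    Birth months and yes/no answers are independent random draws, so every
    flagged month/question pair is a false discovery.
    """
    rng = random.Random(seed)
    months = [rng.randrange(12) for _ in range(n_people)]
    answers = [[rng.random() < 0.5 for _ in range(n_questions)]
               for _ in range(n_people)]
    # Respondent indices grouped by birth month.
    by_month = [[i for i, mo in enumerate(months) if mo == m] for m in range(12)]

    hits = tests = 0
    for q in range(n_questions):
        total_yes = sum(row[q] for row in answers)
        for idx in by_month:
            n_in, n_out = len(idx), n_people - len(idx)
            if n_in == 0 or n_out == 0:
                continue
            yes_in = sum(answers[i][q] for i in idx)
            # Compare this month's yes-rate with everyone else's.
            z = z_two_proportions(yes_in, n_in, total_yes - yes_in, n_out)
            tests += 1
            if abs(z) > 1.96:  # roughly p < 0.05, two-sided
                hits += 1
    return hits, tests


hits, tests = count_false_discoveries()
print(f"{hits} of {tests} month/question comparisons look 'significant' in pure noise")
```

With a 5% threshold you should expect roughly 5% of the 1,200 comparisons (on the order of 60) to be flagged even though nothing real is there, which is why a pattern found this way is a lead for a follow-up experiment rather than a conclusion.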
Product leaders sometimes get swayed by the sheer volume of data, or believe they have a meaningful result simply because it is “statistically significant.” Large datasets do demand rigour to establish statistical significance. However, with a large enough sample, even a trivially small difference will test as significant, and when it comes to understanding how users actually interact with the system, their preferences and their motivations, statistical significance on its own is far from sufficient for meaningful research.

For any quantitative measure, your biggest asset is knowing the right questions to ask. Start with a theory that you want to prove or disprove (your hypothesis), then use analytics and surveys to steer more detailed user research. For example, you could use SaaS analytics or a survey to get a high-level sense of preference for a feature, then use qualitative methods like user interviews to understand that preference with the appropriate context and detail.

## Misuse Of A/B Testing

Sometimes design questions turn into an internal debate between proposed solutions. The lead architect is convinced a feature should look or function one way, and the product manager has a different theory. A situation like this can be a good candidate for A/B testing, a fast form of user research where you launch a few designs to different user groups, run the test, and compare the results. A/B testing measures which of several designs produces the most conversions, fewest clicks, fastest time, most intense emotional response, or whatever metric you decide to measure. Online testing tools take advantage of crowdsourcing to make this process even more convenient.

But you need to be aware of the drawbacks of A/B testing. First, you have to rely on your best guess as to the real reason why Design A performed better than Design B. You don’t get any feedback on whether or not the user “gets” the system, which makes it hard to stay in the problem space.
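Comparing two designs on a conversion metric is commonly done with a two-proportion z-test. The minimal Python sketch below uses only the standard library; the function name `ab_test` and all visitor and conversion counts are hypothetical. It also illustrates the point about sheer volume: with millions of visitors per design, a 0.1 percentage-point difference comes out “statistically significant” even though it may be too small to matter.

```python
import math


def ab_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Two-sided two-proportion z-test on the conversion rates of designs A and B.

    Returns (z, p_value). A positive z means design B converted better.
    """
    rate_a = conv_a / n_a
    rate_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        return 0.0, 1.0  # both groups converted at 0% or 100%: nothing to compare
    z = (rate_b - rate_a) / se
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Hypothetical test: 1,000 visitors per design, 20% vs 25% conversion.
z, p = ab_test(200, 1000, 250, 1000)
print(f"small test: z = {z:.2f}, p = {p:.4f}")

# Hypothetical large test: 10.0% vs 10.1% conversion, 2 million visitors each.
z_big, p_big = ab_test(200_000, 2_000_000, 202_000, 2_000_000)
print(f"large test: z = {z_big:.2f}, p = {p_big:.4f}")
```

Both comparisons clear the usual 0.05 bar, but a small p-value only says the difference is unlikely to be pure chance. It says nothing about whether the difference matters or why users preferred one design, which is the gap qualitative research fills.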
Second, you can inadvertently commit yourself to a non-optimal solution. Incremental A/B testing finds whichever of the presented solutions produced the best results. However, because you always test one solution against the others, you run the risk of getting stuck with the best you’ve got, not the best possible solution. A/B testing should be used as a quick cheat, a complement to other forms of research such as contextual interviews, concept walkthroughs, and usability testing.

## To Really Understand, Interview Customers and Prospects Regularly

Yes, salespeople talk to customers, but their context is making a sale, not gaining insight into urgent, pervasive customer needs. There's a growing consensus that the sales view of customers is important but incomplete. Someone else, whether marketing, product management, growth hackers, or whatever you want to call them, must be designated to own a process for methodically surfacing the voice of the customer through exploratory interviews.

Pragmatic Marketing defines 3 worlds:

1. your actual paying customers
2. your interested prospects
3. people in your target markets who have never heard of you

Companies that talk to their customers usually talk to group 1, have sales-only conversations with group 2, and often miss group 3 entirely. But you need data from all of these sources to get a complete picture of product/market fit.

So how will you get in front of all the other potential customers (prospects, competitors' customers, and people in your target market who have not heard of you)? The most talented PMs I know are mini-entrepreneurs who pride themselves on building up a network of these potential customers to talk to. Here are some suggestions:

## Understand their "Why"

In The Complete Guide To Customer Interviews that Drive Product-Market Fit, we delve deeply into how to plan and execute customer interviews that get to the why: the root cause that motivated someone to click a button, sign up, or convert to paid. Rule #1 is to keep the conversation in the "problem space". What problem or pain point was the customer looking to solve? You may need to ask this many times over, and in different ways. Keep asking "why?" until you understand the underlying problem they are trying to solve.

Further reading:
